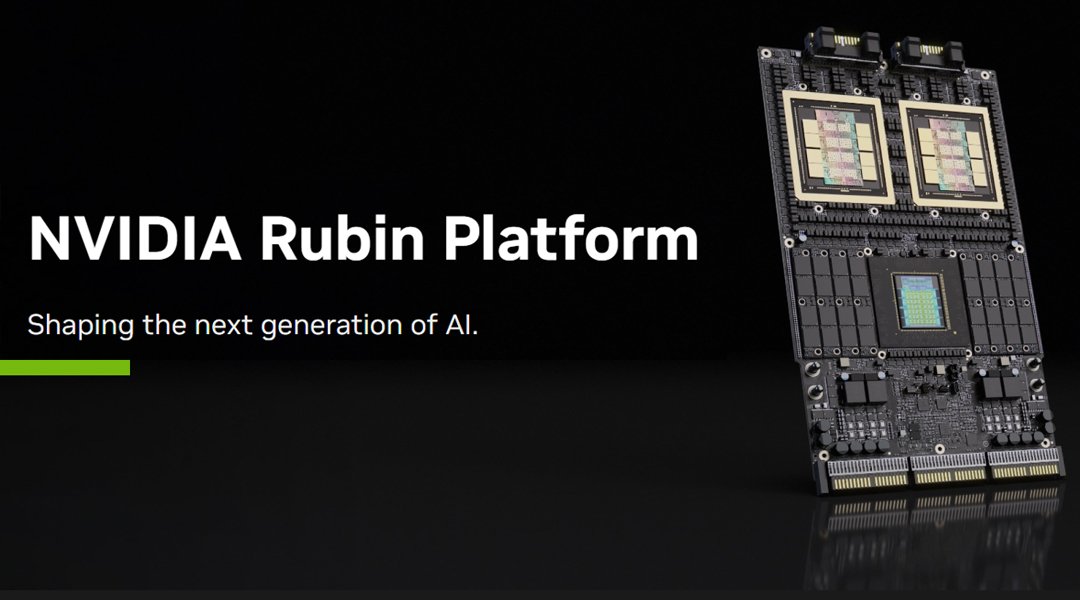

At a critical juncture where the demand for AI computing power is skyrocketing and the competitive landscape is undergoing dramatic changes, Nvidia has not rested on the laurels of Blackwell, but has instead launched its next-generation platform, “Rubin,” at an even faster pace. This is not a simple chip iteration, but a systemic leap aimed at redefining the rules of competition.

Background of Launch: Facing Encirclement and Suppression at the Peak

Rubin was shaped by three core pressures:

1. Evolution of Demands: While the scale of AI models continues to grow, the bottleneck is shifting from simple computing power to **memory capacity, bandwidth, and energy consumption**. The training and real-time inference of giant models require a revolutionary memory system.

2. Elevated Competition: Competitors are shifting from “challenging single-point performance” to “building alternative ecosystems.” AMD is vying for customers with its open ROCm and high-bandwidth memory solutions; cloud giants (Google TPU, AWS Trainium) are seeing their self-developed chips mature; and numerous startups are seeking breakthroughs in specific architectures (such as in-memory computing).

3. Self-renewal: To maintain its “generational” advantage, Nvidia must move beyond the scope of “GPU chips” and shift to full-scale competition in the “computing platform” field, extending its leading advantage from chips to the entire stack of interconnect, systems, and software.
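
The shift in point 1, from compute-bound to memory-bound workloads, can be illustrated with a roofline-style estimate. This is a minimal sketch, not a model of any real product: the peak throughput and bandwidth figures are placeholder assumptions, and `attainable_tflops` is a hypothetical helper introduced only for this example.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tbps: float, intensity: float) -> float:
    """Roofline bound: the lower of the compute roof and bandwidth * intensity.

    intensity is the arithmetic intensity in FLOPs per byte moved to or from memory.
    TB/s multiplied by FLOPs/byte gives TFLOP/s, so the units line up.
    """
    return min(peak_tflops, bandwidth_tbps * intensity)


# Placeholder accelerator (assumed numbers, not taken from any spec sheet).
PEAK_TFLOPS = 1000.0    # peak compute in TFLOP/s
BANDWIDTH_TBPS = 4.0    # memory bandwidth in TB/s

# Ridge point: the intensity above which a workload stops being memory-bound.
ridge_point = PEAK_TFLOPS / BANDWIDTH_TBPS  # FLOPs per byte

# Batch-1 decoding is roughly a matrix-vector product: each 2-byte weight
# is read once and used for one multiply-add (2 FLOPs).
decode_intensity = 2 / 2  # about 1 FLOP per byte

decode_tflops = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TBPS, decode_intensity)

print(f"ridge point: {ridge_point:.0f} FLOPs/byte")
print(f"attainable at {decode_intensity:.0f} FLOP/byte: {decode_tflops:.1f} TFLOP/s")
```

With these assumed figures, a workload at about 1 FLOP per byte is capped by bandwidth far below the compute roof, which is why extra TFLOPS alone would not help it.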

Core Advantage: Consolidating the Throne with System-Level Barriers

Rubin’s core competitiveness lies in transforming its hardware advantages into an unshakeable system-level ecosystem barrier.

| Comparison dimension | Nvidia Rubin (expected) | Major competitors (such as AMD MI300X) | Impact and significance |
| --- | --- | --- | --- |
| Core architecture | The next-generation GPU core focuses on dynamic optimization and energy efficiency improvement for AI workloads. | The mature CDNA architecture, with a CPU+GPU fusion design in the MI300A variant. | It’s not about generational disruption, but rather continuous improvement and optimization. |
| Memory system | Expected key breakthrough: The adoption of a new generation of HBM (such as HBM4) may achieve a leap in capacity and bandwidth, with the goal of directly addressing the “memory wall”. | The adoption of high-capacity HBM3 is a current advantage. | The main battleground has shifted: the focus of competition has moved from TFLOPS to whether ultra-large models can be held and quickly accessed on a single card or node. |
| Interconnect technology | Key advantage: The next-generation NVLink and NVLink Switch offer significantly higher chip-to-chip interconnect bandwidth and topology flexibility than industry standards. | Relies on AMD’s Infinity Fabric alongside open standards, with gaps in bandwidth and flexibility. | Defines the upper limit of cluster size: This determines the efficiency of clusters with more than 10,000 GPUs and is the absolute threshold for ultra-large-scale training. |
| Software ecosystem | The ultimate moat: CUDA and full-stack software (TensorRT, Triton, etc.) have built a complete system covering development, deployment, and optimization, with unparalleled maturity and richness. | ROCm is still in a catching-up position in terms of toolchain depth, enterprise support, and community inertia. | Locks in users and the future: Migration costs are extremely high, and user inertia is a more solid defense than hardware performance. |
| Platform strategy | System-level delivery: Provides a full-stack reference design from chips and switches to racks, reducing deployment complexity. | They focus primarily on the chip level, relying on partners for system integration. | Enhances customer loyalty: Transforms Nvidia from a supplier into a strategic infrastructure partner providing turnkey solutions. |
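
To see why the interconnect row matters for cluster efficiency, the time spent synchronizing gradients can be estimated with the standard bandwidth-only model of a ring all-reduce. This is a rough sketch under stated assumptions: the link speeds and payload are placeholders rather than measured NVLink or Infinity Fabric figures, latency is ignored, and `ring_allreduce_seconds` is a hypothetical helper. Ring all-reduce is used here only as a well-known reference algorithm, not as a claim about what any vendor's library does.

```python
def ring_allreduce_seconds(payload_gb: float, n_gpus: int, link_gb_per_s: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce (latency ignored).

    In a ring all-reduce each GPU sends 2 * (N - 1) / N times the payload
    over its link, so the time is that traffic divided by link bandwidth.
    payload_gb is in gigabytes, link_gb_per_s in gigabytes per second.
    """
    if n_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic_gb = 2 * (n_gpus - 1) / n_gpus * payload_gb
    return traffic_gb / link_gb_per_s


GRADIENTS_GB = 20.0  # e.g. 10 billion parameters at 2 bytes each

# Assumed per-GPU link bandwidths, for illustration only.
links = {"fast link (assumed 900 GB/s)": 900.0, "slow link (assumed 50 GB/s)": 50.0}

for name, bandwidth in links.items():
    seconds = ring_allreduce_seconds(GRADIENTS_GB, 8, bandwidth)
    print(f"{name}: {seconds * 1000:.1f} ms per all-reduce across 8 GPUs")
```

Because this cost is paid on every training step, a gap in link bandwidth translates directly into idle compute at scale.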

Market Impact: Accelerated Differentiation and Ecosystem Showdown

The launch of Rubin will have a profound impact on the market landscape:

1. Raising the bar for competition: The competition has been upgraded from “chip manufacturing” to “system manufacturing,” placing extreme demands on competitors’ capital, technology, and ecosystem integration capabilities.

2. Promoting market segmentation:

   - Top of the pyramid (hyperscale training and research): oligopolistic competition between Nvidia and a few players (such as AMD and the cloud giants).

   - Main market (industry model training and inference): more dedicated or semi-dedicated solutions are emerging, chosen for cost-effectiveness and specific scenarios (such as video processing and autonomous driving).

3. Intensifying the Ecosystem Battle: This will force competitors like AMD to increase their investment in software and developer communities, and may also spur stronger demand for open interconnect standards (such as Ultra Ethernet) to counter NVLink’s closed ecosystem.

Industry Trends: Representing the Present, But Not Necessarily Defining the Future

Rubin accurately represents the peak of AI chip development under the current mainstream technology path:

Representative Trends: The ultimate pursuit of memory bandwidth, the diversification of computing precision (FP8), and the vertical integration of “chip-system-software”.

Undefined Trends: The longer-term future belongs to **architectural innovation**. Revolutionary paths such as neuromorphic computing, in-memory computing, and photonic computing are still developing, and they may fundamentally change the paradigm of AI computing. Rubin represents the pinnacle of the von Neumann architecture, but it may not be the final form.

Applicable Scenarios

Rubin is a weapon designed for “computing power limit challengers”:

1. Cutting-edge AI R&D: Full-process training of next-generation trillion-parameter multimodal large models.

2. National-level computing infrastructure: Building national or regional strategic AI computing infrastructure.

3. Hyperscale cloud services: Top cloud vendors offering the highest-performance AI-as-a-service products.

4. Complex Scientific Computing: Fields requiring ultra-high double-precision computing power, such as climate simulation, gene sequencing, and high-energy physics.
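
As a rough sense of scale for the trillion-parameter models in scenario 1, the memory needed just to hold the weights can be computed from the parameter count and the bytes per parameter. This is a back-of-the-envelope sketch: the 200 GB per-device capacity is an assumed placeholder, not a Rubin specification, and `weights_gb` and `min_devices` are hypothetical helpers. Training needs considerably more memory than this for gradients, optimizer state, and activations.

```python
import math

# Storage size of one parameter at each precision, in bytes.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weights_gb(n_params: int, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def min_devices(n_params: int, precision: str, device_gb: float) -> int:
    """Smallest device count whose combined memory holds the weights."""
    return math.ceil(weights_gb(n_params, precision) / device_gb)


N_PARAMS = 1_000_000_000_000  # one trillion parameters
DEVICE_GB = 200.0             # assumed per-device memory, illustration only

for precision in BYTES_PER_PARAM:
    size = weights_gb(N_PARAMS, precision)
    count = min_devices(N_PARAMS, precision, DEVICE_GB)
    print(f"{precision}: {size:.0f} GB of weights, at least {count} devices")
```

Halving the precision halves the footprint, which is one reason lower-precision formats and larger memory capacity are pursued together.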

Procurement and Strategic Recommendations

Decision-making should be based on a clear self-positioning:

1. Immediate Follow-up and Full Commitment: Applicable to leading technology companies or national laboratories that must maintain an absolute leading edge in AI models. When budget allows, priority should be given to ensuring early access to and deployment of the Rubin platform.

2. Evaluate cost-effectiveness and consider a hybrid architecture: Most enterprises need a calm assessment: can the Blackwell or even Hopper platform meet the needs of the next 2-3 years? A hybrid architecture of “Rubin core + other supplements” can be adopted, using Rubin to tackle key models and other solutions to handle inference and development tasks.

3. Strategically Avoid Single-Vendor Lock-In: Even if you mainly rely on NVIDIA, you should maintain a certain degree of flexibility at the software level (such as making more use of the high-level APIs of open-source frameworks like PyTorch) to leave room for future ecosystem diversification.

4. Focus on energy efficiency and total cost of ownership: Beyond peak computing power, it’s crucial to assess the effectiveness of computing power per dollar and computing power per watt, which is essential for large-scale deployments.
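
The comparison in point 4 can be made concrete with a small calculation of total cost of ownership, performance per dollar, and performance per watt. This is a minimal sketch under simplifying assumptions: every price, power, and throughput figure is invented for illustration, the cost model covers only purchase price plus electricity, and the `Option` class and helper functions are hypothetical.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class Option:
    name: str
    price_usd: float         # purchase price per accelerator
    power_kw: float          # average power draw per accelerator
    sustained_tflops: float  # throughput on your own workload, not the peak


def tco_usd(option: Option, years: float, usd_per_kwh: float, pue: float = 1.0) -> float:
    """Purchase price plus electricity over the period, scaled by data-center PUE."""
    energy_kwh = option.power_kw * pue * HOURS_PER_YEAR * years
    return option.price_usd + energy_kwh * usd_per_kwh


def tflops_per_kusd(option: Option, years: float, usd_per_kwh: float, pue: float = 1.0) -> float:
    """Sustained TFLOP/s per thousand dollars of total cost."""
    return option.sustained_tflops / (tco_usd(option, years, usd_per_kwh, pue) / 1000)


def tflops_per_watt(option: Option) -> float:
    """Sustained TFLOP/s per watt of average power draw."""
    return option.sustained_tflops / (option.power_kw * 1000)


# Invented figures for two unnamed options, for illustration only.
options = [
    Option("newer platform", price_usd=40000.0, power_kw=1.2, sustained_tflops=900.0),
    Option("older platform", price_usd=25000.0, power_kw=0.7, sustained_tflops=400.0),
]

for o in options:
    print(
        f"{o.name}: TCO ${tco_usd(o, 3, 0.10, pue=1.3):,.0f}, "
        f"{tflops_per_kusd(o, 3, 0.10, pue=1.3):.1f} TFLOP/s per $1k, "
        f"{tflops_per_watt(o):.2f} TFLOP/s per W"
    )
```

The sustained figure should come from benchmarks on your own workload, since peak numbers rarely reflect what a deployment actually achieves.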

Conclusion: A Strategic Offensive That Elevates Competition

NVIDIA Rubin represents a strategic offensive, elevating competition to the system level and attempting to solidify an empire that cannot be shaken by mere breakthroughs in chip performance. For the industry, it signifies another refresh of the current technological pinnacle in computing power and heralds a new phase for the AI computing market: a phase with deeper ecosystem moats, higher technological barriers, and simultaneously, a phase that incentivizes more diverse underlying innovation. Choosing Rubin is not just choosing hardware; it’s choosing to integrate into a computing ecosystem that defines an era.
