For years NVIDIA’s DGX systems have been synonymous with ready-to-deploy, high-performance AI infrastructure: purpose-built servers, tightly integrated software, and a steady stream of GPU improvements that make them attractive to research institutions, cloud partners, and enterprises training large models. But the AI infrastructure market is evolving fast — specialized chips, hyperscalers building custom accelerators, and strong OEM offerings are all contesting the same territory. Here’s a clear assessment of whether DGX can stay at the top.

Why DGX still starts with a sizable edge

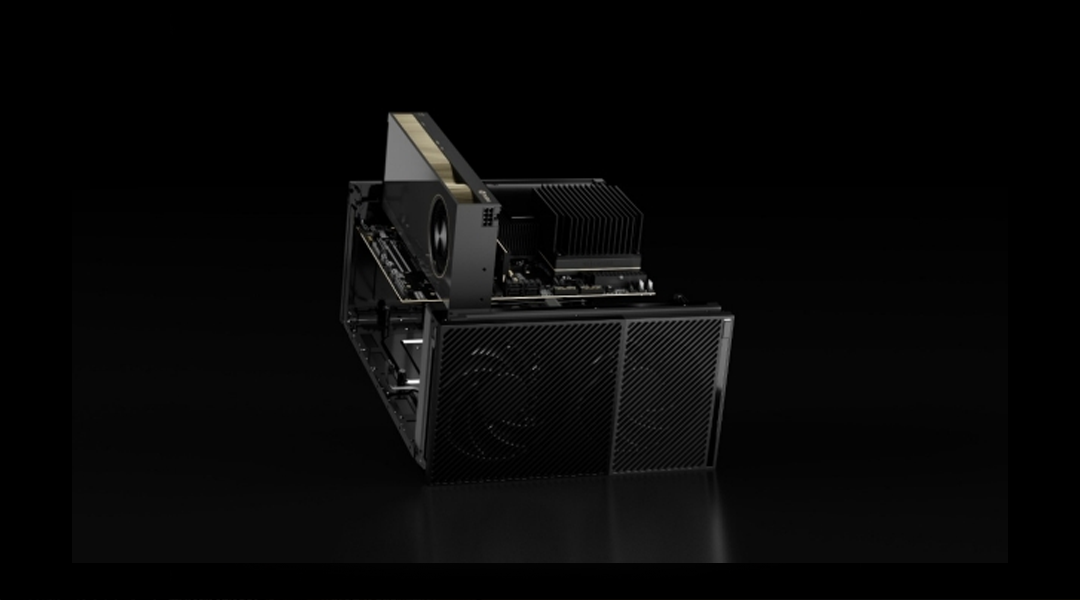

DGX is more than just hardware; it’s a cohesive ecosystem. NVIDIA bundles powerful GPUs, high-bandwidth NVLink interconnects, tuned system software, and a broad set of developer tools (CUDA, cuDNN, Triton, NVIDIA AI Foundations, DGX Cloud integration). That integrated package cuts the time and effort customers spend assembling and tuning components, and it creates real inertia against switching to other platforms.

NVIDIA also continues to post performance milestones: recent DGX configurations using Blackwell-era GPUs have demonstrated strong benchmark results and throughput on modern large-language model workloads. That tangible performance lead often translates into shorter training times and quicker returns for AI teams.

Who’s challenging DGX?

Competitors cluster into three groups:

• Specialized AI silicon firms — Companies like Cerebras, SambaNova, and Graphcore offer non-GPU architectures (wafer-scale engines, dataflow processors, IPUs) that can excel at certain high-throughput or memory-hungry training tasks. On particular workloads, these designs can beat GPU clusters, representing a technology-focused threat for cutting-edge research projects.

• OEM/server manufacturers — Dell, HPE, Lenovo and others sell dense AI appliances built around accelerators (sometimes NVIDIA, sometimes alternatives). These vendors package rack-scale systems and managed services that compete directly with DGX for enterprise deployments, often with different pricing and support models.

• Hyperscalers and custom accelerators — Google (TPUs), AWS (Trainium/Inferentia and custom instances), and Microsoft/Azure provide cloud-native acceleration. For many organizations, cloud offerings remove the need to purchase on-prem DGX boxes altogether; the cloud’s elasticity, competitive pricing, and broad availability are compelling for teams that prefer OPEX instead of CAPEX.

Strengths and vulnerabilities — where DGX wins and where it’s exposed

Key advantages for NVIDIA:

- Software and ecosystem: A vast number of frameworks and tools are optimized for NVIDIA’s CUDA ecosystem, making porting and adoption easier for developers.

- Regular hardware cadence: Frequent GPU updates and turnkey DGX releases give customers access to the latest performance improvements without heavy integration work.

Main risks:

- Niche hardware advantages: Specialist architectures can offer superior memory capacity, power efficiency at scale, or raw throughput for certain classes of models — important for ultra-large training efforts.

- Cloud economics: Hyperscalers improving price/performance with their own accelerators make cloud alternatives more attractive, particularly for organizations that value flexibility and lower upfront costs.

- OEM and TCO pressures: Server vendors packaging comparable systems, often with different support or pricing, can win customers focused on total cost of ownership or local services.
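The CAPEX-versus-OPEX trade-off behind the cloud and TCO risks can be made concrete with a little arithmetic. The sketch below is a minimal illustration, not a pricing model: the function names are hypothetical, and every number in `example` is a placeholder rather than a real DGX or cloud price. It estimates how heavily a purchased system must be utilized before it becomes cheaper than renting equivalent accelerator hours.

```python
def on_prem_cost_per_gpu_hour(capex, annual_opex, lifetime_years, gpus, utilization):
    """Effective cost of one *used* GPU-hour on a purchased system."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_cost = capex + annual_opex * lifetime_years
    used_gpu_hours = gpus * lifetime_years * 365 * 24 * utilization
    return total_cost / used_gpu_hours


def break_even_utilization(capex, annual_opex, lifetime_years, gpus, cloud_rate):
    """Utilization at which owning costs the same per used GPU-hour as renting.

    A result above 1.0 means the purchased system cannot beat the cloud rate
    even if it runs around the clock.
    """
    total_cost = capex + annual_opex * lifetime_years
    available_gpu_hours = gpus * lifetime_years * 365 * 24
    return total_cost / (cloud_rate * available_gpu_hours)


# Placeholder inputs for illustration only; substitute your own quotes.
example = dict(capex=400_000.0, annual_opex=60_000.0, lifetime_years=4, gpus=8)
cloud_rate = 4.0  # assumed cloud price per accelerator-hour (placeholder)

u = break_even_utilization(cloud_rate=cloud_rate, **example)
print(f"break-even utilization: {u:.1%}")
```

Above the break-even utilization, ownership wins on cost per used hour; below it, renting does. The sketch deliberately ignores staffing, financing, resale value, and performance differences between accelerators, all of which can move the answer.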

Short-term outlook (next 2–3 years)

DGX is likely to remain a leading option for organizations that want a turnkey mix of top-tier GPU performance, mature software, and operational simplicity. Its established ecosystem, performance record, and partner network keep it at, or very near, the top for a wide range of enterprise and research workloads.

That said, its dominance will be selectively eroded. Hyperscalers and custom-silicon providers will capture workloads where cloud economics or specialized architectures deliver superior price-performance (very large-scale model training, hyperscale inference, or workloads matched to IPU/wafer-scale strengths). OEMs and regional players will also win customers that prioritize local support, lower pricing, or regulatory compliance.

Practical advice

If you’re choosing infrastructure now: DGX remains the safest bet when you need broad compatibility, strong vendor support, and the fastest route to production for mainstream LLM training and inference. If, however, your tasks are extremely large-scale or highly specialized — or if you’re cloud-first and extremely cost-sensitive — evaluate alternatives (Cerebras, Graphcore, SambaNova, and hyperscaler-native accelerators) and benchmark them on your real workloads.
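One way to act on the advice to benchmark on real workloads is to measure throughput with the same harness on each candidate platform and convert it to a cost figure. The following is a minimal sketch under stated assumptions: `measure_tokens_per_second`, `cost_per_million_tokens`, and `dummy_step` are hypothetical names, the hourly cost is a placeholder, and `dummy_step` stands in for one real training or inference batch on the system under test.

```python
import time
from typing import Callable


def measure_tokens_per_second(step: Callable[[], int], warmup: int = 2, iters: int = 10) -> float:
    """Time `step`, which runs one batch and returns the tokens it processed.

    On accelerators with asynchronous execution, `step` must block until the
    device has finished; otherwise the timing only covers kernel launches.
    """
    for _ in range(warmup):  # warm-up runs are excluded from the timing
        step()
    tokens = 0
    start = time.perf_counter()
    for _ in range(iters):
        tokens += step()
    elapsed = time.perf_counter() - start
    return tokens / elapsed


def cost_per_million_tokens(tokens_per_second: float, hourly_cost: float) -> float:
    """Convert measured throughput and an hourly system cost into cost per 1M tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost / tokens_per_hour * 1_000_000


def dummy_step() -> int:
    """Stand-in workload: replace with one real batch on the platform being tested."""
    time.sleep(0.01)
    return 4096


tps = measure_tokens_per_second(dummy_step, warmup=1, iters=5)
hourly_cost = 30.0  # placeholder: cloud hourly rate or amortized on-prem cost
print(f"{tps:,.0f} tokens/s, {cost_per_million_tokens(tps, hourly_cost):.4f} per 1M tokens")
```

Running the same `step` definition (same model, batch size, and sequence length) across platforms keeps the comparison fair; vendor-published benchmarks rarely match a team's own model and data pipeline.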

Final thought

NVIDIA’s DGX crown is solid but no longer unchallenged. Its continued leadership will hinge on preserving its software ecosystem and delivering clear cost-and-performance advantages as competitors across the globe advance their hardware and cloud offerings.

DGX vs. 7 Major Competitors — concise comparison

| Vendor / Product | Typical performance profile (relative) | Memory / key hardware notes | Best-fit workloads | Typical TCO signal (qualitative) |
|---|---|---|---|---|
| NVIDIA: DGX (e.g., DGX B200 / DGX Cloud) | Top-tier GPU throughput for mainstream LLM training and inference; frequent public performance records. | Up to 8 Blackwell GPUs per DGX B200; very high aggregate HBM capacity and NVLink fabric. | General-purpose LLM training, mixed AI/HPC workloads, shops wanting turnkey stacks. | High CAPEX for on-prem DGX appliances; lower integration cost due to mature software stack (CUDA, Triton). DGX Cloud gives an OPEX alternative. |
| Cerebras: Wafer-Scale Engines (CS-series, WSE-3) | Exceptional single-system throughput for very large models and some inference workloads; vendor claims top LLM inference speed. | Extremely large on-chip memory and die (wafer scale); system memory and fabric tuned for huge models. | Very large model training where single-system memory and low-latency fabric matter; research centers and specialized labs. | Very high list/system cost (often multi-hundred-thousand to multi-million per system in public reporting); can reduce time-to-result on specific workloads. |
| Graphcore: IPU (GC200, IPU-Machine) | Strong parallelism on graph/transformer-like workloads and models that benefit from on-chip memory; claims high FLOPS per IPU. | Large in-processor memory (e.g., ~900 MB per IPU on some chips), many cores per IPU; composable IPU pods. | Research and production workloads that benefit from fine-grained parallelism and keeping large model state on-chip. | Mid-to-high CAPEX; attractive when models map well to the IPU architecture (lower TCO for those workloads). |
| SambaNova: DataScale / RDUs | Vendor claims high throughput and low latency for inference and certain training pipelines; MLPerf and customer benchmarks vary by workload. | Reconfigurable dataflow units (RDUs) and appliance packaging optimized for throughput/latency. | Large inference fleets, specialized production models, customer deployments needing turnkey appliances. | Quote-based systems (typically large enterprise spends); claims of big speedups on some use cases, but these need validation against customers’ real workloads. |
| Google: TPU Pods (v4 / v5 / Trillium / Ironwood family) | Very high performance per dollar for large distributed training; strong at scale (TPU pod networking). Public hourly pricing for Cloud TPU is available. | High HBM per chip and pod-scale memory; TPU designs optimized for matrix/tensor ops and scale-out. | Hyperscale training, heavy matrix compute models, cloud-first organizations seeking OPEX flexibility. | Cloud OPEX (hourly TPU pricing published); often lower $/token for very large training runs; eliminates on-prem CAPEX. |
| AWS: Trainium (Trn2) / Inferentia (Inf2) | AWS claims 30–40% better price-performance for Trainium2 instances vs comparable GPU EC2 instances; aimed at cost-efficient, large-scale training. | Cloud instances with Trainium chips (Trn2) provide large memory aggregates in instance clusters; Amazon bundles UltraServer/UltraCluster options. | Cloud-first training at scale, teams optimizing for $/train-step and energy efficiency. | Low upfront cost (OPEX hourly); strong $/performance for suitable models; easier regional scaling and pay-as-you-go. |
| Intel / Habana: Gaudi2 | Competitive MLPerf and vendor benchmarks vs GPU alternatives on many training tasks; positioned as a lower-cost alternative. | Gaudi2 accelerators with server integrations (e.g., Supermicro); good price/performance claims. | Cost-sensitive training clusters, organizations evaluating non-GPU racks for training. | Often lower list price per board than top-end GPUs; TCO advantage on workloads that map well to Gaudi2. |
| AMD: Instinct MI300 series (MI300X, MI325X, etc.) | Strong raw FP8/FP16 and memory bandwidth specs vs prior GPU generations; good MLPerf results for inference/training in published tests. | Higher memory capacity per card vs many GPUs and competitive memory bandwidth; OEM rack systems available (Dell, HPE, etc.). | Workloads that need large GPU memory and dense FP8/FP16 throughput; cloud providers and OEM racks. | Competitive pricing expectations per card (public estimates vary); TCO depends on software maturity (ROCm) and workload fit. |
