As AI, HPC, and data-heavy workloads proliferate, data centers increasingly demand modular systems that deliver high GPU density, flexible I/O, and an upgrade path across CPU, GPU, and DPU generations. NVIDIA’s MGX is a deliberate attempt to set a new standard: a modular reference architecture that lets OEMs build GPU- and DPU-forward servers from standardized building blocks. But is MGX the best choice for modern data centers? Below is a practical analysis of MGX, its strengths and weaknesses, and how it stacks up against established and emerging modular alternatives.

What MGX promises

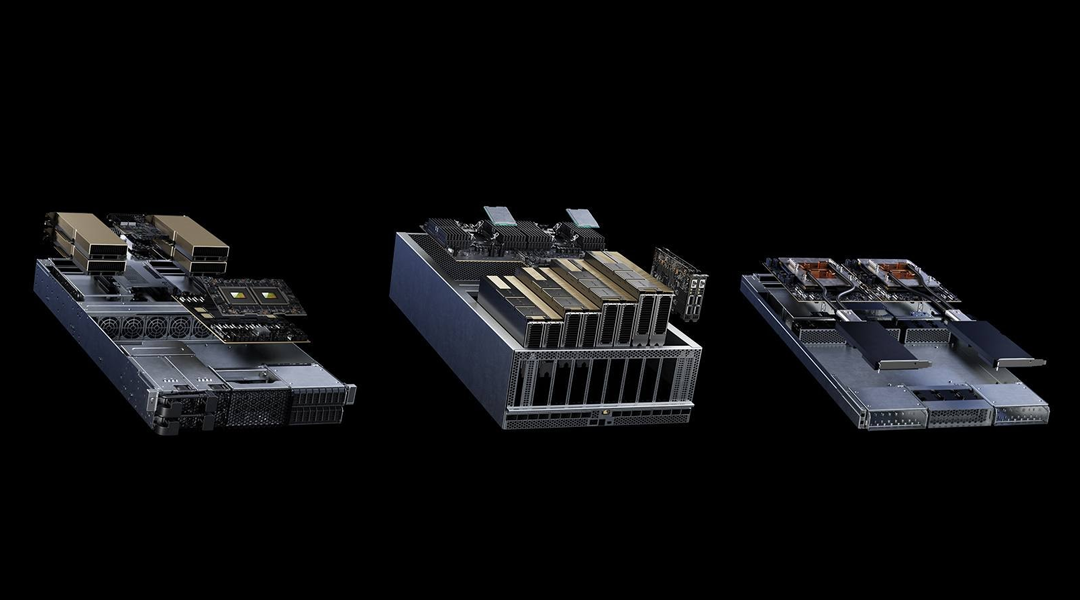

MGX is positioned as a modular reference design that standardizes connectors, power, cooling, and chassis building blocks so system makers can assemble GPU- and DPU-centric servers more quickly and with predictable thermal and electrical characteristics. The architecture is explicitly framed around modern accelerated workloads — supporting multiple GPU generations, DPUs, and a plug-and-play approach to scaling compute and networking. NVIDIA argues MGX reduces time-to-market for vendors while improving ROI for operators who want repeatable, validated configurations.

Strengths that make MGX attractive

- Acceleration-first design: MGX is built from the start to host high-density GPUs and DPUs, aligning with the hardware profile of today’s AI and cloud workloads. That makes it easier to optimize power delivery, cooling, and PCIe/accelerator interconnects for throughput-heavy applications.

- Ecosystem and validation: Because MGX is an NVIDIA-led reference, vendors can lean on validated component stacks and software integrations with NVIDIA’s software ecosystem, reducing integration risk for AI stacks. That ecosystem effect is powerful where TensorRT, CUDA, and NVIDIA networking/DPUs are already preferred.

- Modularity for future upgrades: Standardized mechanical and electrical blocks simplify swapping in next-gen GPUs or DPUs without redesigning the whole chassis — a meaningful advantage for long-lived data-center infrastructure.

Where MGX may not be the universal winner

- Vendor-centric orientation: MGX is optimized for NVIDIA-style acceleration. If an operator wants vendor neutrality (for example, to mix GPUs from multiple vendors) or plans to emphasize CPU-centric scale-out, MGX’s advantages are less compelling.

- Ecosystem lock-in risks: Deep integration with NVIDIA tooling speeds deployment but can make future diversification more costly.

- Thermal & power tradeoffs: High-density accelerator designs demand substantial power and sophisticated cooling; not every data center can absorb that operational cost without facility upgrades. MGX helps standardize the platform, but it doesn’t eliminate infrastructure requirements.
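To make the facility constraint concrete, here is a minimal sketch of a rack power-budget check. Every number in it (GPUs per node, per-GPU and base wattage, nodes per rack, the 1.1 overhead factor, and the 15 kW and 40 kW rack budgets) is a hypothetical placeholder, not an MGX specification. Substitute vendor-published figures and your facility's actual limits before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class NodeProfile:
    """Hypothetical per-node power profile; all figures are illustrative placeholders."""
    gpus: int
    watts_per_gpu: float  # assumed sustained accelerator draw per GPU
    base_watts: float     # assumed CPU, DPU, memory, fans, and storage draw

    def total_watts(self) -> float:
        # Accelerator load plus the rest of the node
        return self.gpus * self.watts_per_gpu + self.base_watts


def rack_load_kw(node: NodeProfile, nodes_per_rack: int, overhead: float = 1.1) -> float:
    """Estimated rack load in kW; `overhead` is an assumed factor for PSU losses and margin."""
    return node.total_watts() * nodes_per_rack * overhead / 1000.0


def fits_budget(load_kw: float, budget_kw: float) -> bool:
    """True if the estimated load stays within the rack's power budget."""
    return load_kw <= budget_kw


if __name__ == "__main__":
    # Hypothetical dense-GPU node: 4 GPUs at 700 W each plus 1,000 W for everything else
    node = NodeProfile(gpus=4, watts_per_gpu=700.0, base_watts=1000.0)
    load = rack_load_kw(node, nodes_per_rack=4)
    # Hypothetical budgets: a legacy rack vs. an upgraded one
    for budget in (15.0, 40.0):
        verdict = "fits" if fits_budget(load, budget) else "exceeds"
        print(f"{load:.1f} kW {verdict} a {budget:.0f} kW rack budget")
```

With these placeholder inputs the rack needs about 16.7 kW, which exceeds the hypothetical 15 kW budget but fits the 40 kW one. The specific numbers matter less than the check itself: a standardized chassis fixes the node profile, but the facility still has to supply the power and cooling.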

Competitor comparison

- HPE Synergy (Composable Infrastructure) — HPE’s Synergy focuses on software-defined composition of compute, storage, and fabric to present pooled resources via APIs. It’s strong for mixed workloads and hybrid-cloud flexibility, and it emphasizes composability over pure GPU density. For environments that prioritize fluid resource composition and multi-workload orchestration, Synergy remains compelling.

- Dell PowerEdge MX7000 (Modular Chassis) — Dell’s MX family provides modular sleds and third-party GPU modules (e.g., Amulet Hotkey CoreModules) to add GPU density while keeping chassis-level composability. MX7000 is battle-tested in enterprise deployments and supports a broad ecosystem of modules and management tools.

- Cisco UCS X-Series (Modular/Hybrid Design) — Cisco’s UCS X-Series blends blade and rack concepts with cloud-managed operations (Intersight). It targets hybrid-cloud operators who want unified management and flexible node choices — a pragmatic option if unified operations are the priority.

- Traditional OEM and ODM GPU systems (Supermicro, ASUS, MSI): Supermicro, ASUS, MSI, and other OEMs continue to offer dense GPU servers and chassis that buyers can customize. These provide high performance and vendor variety but often require more integration effort than a standardized reference like MGX.

Recommendation — when MGX is the best choice

MGX is an excellent first choice when your data center strategy is explicitly GPU/DPU-centric: large-scale model training and inference, AI factories, and HPC clusters where NVIDIA software and networking are already central. The modular reference speeds OEM validation and is designed to keep thermal and power characteristics compatible across hardware generations, which matters when you’re buying at scale.

Recommendation — when to consider alternatives

If you need a vendor-neutral, composable platform for mixed workloads, or if unified operations and long-term heterogeneity (multi-vendor GPUs or CPU-first scaling) are top priorities, solutions like HPE Synergy, Dell MX7000, or Cisco UCS X-Series provide stronger multi-purpose flexibility and a broader choice of modules and management ecosystems.

Not a one-size-fits-all “best”

NVIDIA MGX is arguably the best fit for data centers that plan to commit to accelerated computing at scale and want a standardized, validated path to high GPU/DPU density. For mixed, vendor-agnostic, or strictly composable infrastructures, established modular offerings from HPE, Dell, and Cisco remain highly competitive and may better match broader enterprise requirements. Choose MGX when acceleration-first performance and NVIDIA’s end-to-end stack are strategic; choose composable platforms when workload diversity and vendor flexibility matter more.
