As enterprises push AI workloads from the cloud into the field — for manufacturing vision, robotics, smart cities, and safety-critical systems — choosing the right edge platform is as strategic as picking a cloud provider. NVIDIA’s IGX Orin presents itself as a purpose-built, enterprise-grade edge AI platform that combines high compute, functional safety features, and NVIDIA’s software ecosystem. But is it the best first choice for enterprises? Below I analyze IGX Orin’s strengths and limitations and compare it with major competing edge AI options.

What IGX Orin brings to the table

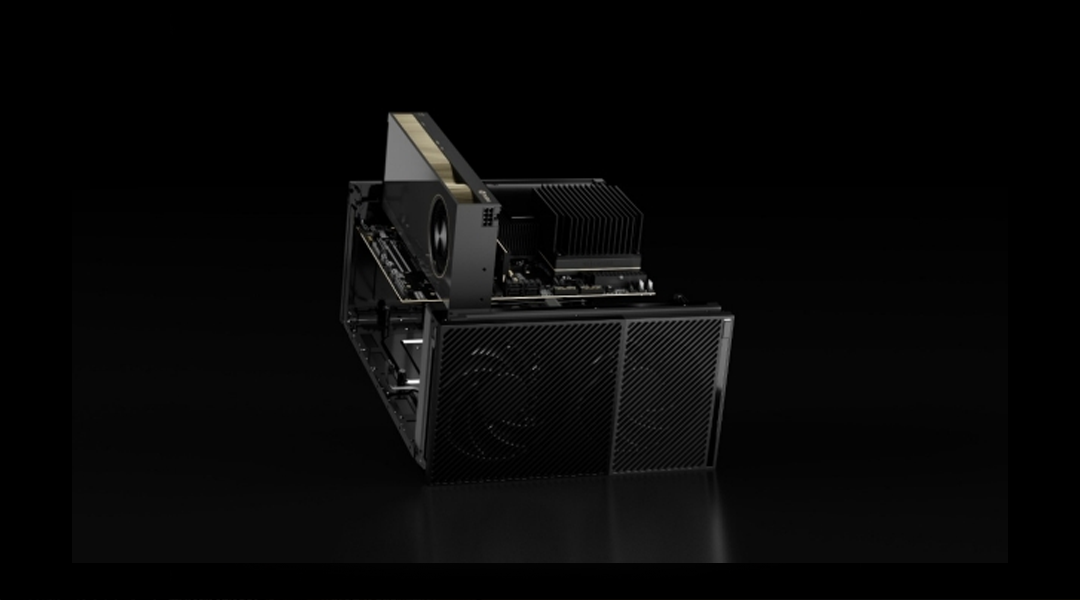

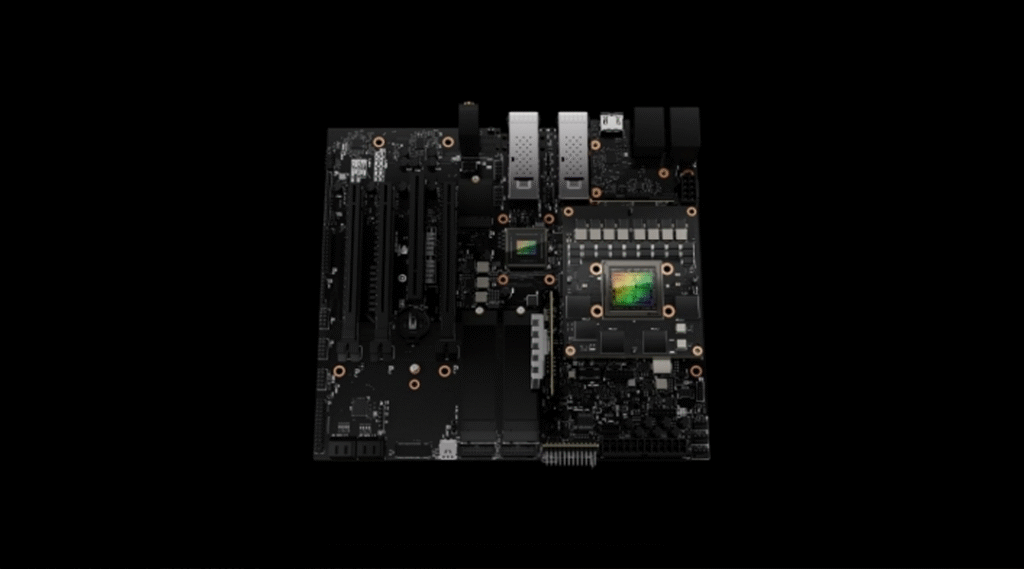

NVIDIA positions IGX Orin as an industrial-grade platform for mission-critical edge AI. The IGX family includes the IGX Orin 700 (a MicroATX board kit) and the IGX Orin 500 (a system-on-module). With an optional discrete RTX GPU, the platform delivers up to 1,705 TOPS. It pairs that compute with a high-bandwidth I/O stack (dual 100 Gb/s ConnectX-7 networking), BMC-based remote management, and on-board safety and root-of-trust components. It also targets functional-safety workflows (ISO 26262 / IEC 61508 capable) and is offered with an enterprise software bundle (NVIDIA AI Enterprise IGX) for long-term support and secure management.

Those facts translate into several practical strengths for enterprises:

- High raw inference throughput and optional dGPU scaling — useful where many camera streams or large models must run locally with low latency.

- Enterprise manageability and networking — built-in ConnectX networking and a BMC (baseboard management controller) for remote management ease deployment at scale.

- Software and ecosystem advantage — compatibility with NVIDIA’s AI stack, SDKs, and long-term enterprise support reduces integration risk.

- Safety & security features — IGX explicitly targets functional safety and includes hardware security primitives, both essential for regulated or safety-critical deployments.
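To make the throughput argument concrete, here is a back-of-envelope sizing sketch that estimates how much peak compute a multi-camera deployment needs and how many devices of each class would cover it. It is a rough model, not a benchmark. The per-inference model cost (`gops_per_frame`) and the fraction of peak actually sustained (`utilization`) are hypothetical inputs you would replace with profiled numbers. The peak figures are the vendor numbers quoted in this article, and they are not directly comparable across precisions or sparsity assumptions.

```python
import math
from dataclasses import dataclass

# Peak TOPS figures quoted in this article (vendor headline numbers, not
# sustained throughput; precision and sparsity assumptions differ by vendor).
PEAK_TOPS = {
    "IGX Orin SoM (integrated)": 248.0,
    "IGX Orin + RTX dGPU": 1705.0,
    "Coral Edge TPU (single)": 4.0,
}


@dataclass
class Workload:
    streams: int           # number of camera streams processed on the node
    fps: float             # inferences per second per stream
    gops_per_frame: float  # hypothetical model cost in giga-operations per inference


def required_tops(w: Workload, utilization: float = 0.3) -> float:
    """Peak TOPS needed if only `utilization` (an assumed fraction) of peak is sustained."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    sustained_tops = w.streams * w.fps * w.gops_per_frame / 1000.0  # GOPS -> TOPS
    return sustained_tops / utilization


def devices_needed(w: Workload, peak_tops: float, utilization: float = 0.3) -> int:
    """Number of devices rated at `peak_tops` each needed to cover the workload."""
    return math.ceil(required_tops(w, utilization) / peak_tops)


if __name__ == "__main__":
    cams = Workload(streams=32, fps=30, gops_per_frame=20)
    print(f"required peak: {required_tops(cams, 0.25):.1f} TOPS")
    for name, peak in PEAK_TOPS.items():
        print(f"{name}: {devices_needed(cams, peak, 0.25)} device(s)")
```

For example, 32 streams at 30 fps with a hypothetical 20-GOP model and an assumed 25% utilization work out to 76.8 TOPS of peak compute. That fits on one IGX Orin SoM by headline figures, versus 20 single Edge TPUs, which is the kind of gap that makes a consolidated high-throughput node attractive for dense camera workloads.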

Where IGX Orin may not be the best fit

- Cost and power envelope: IGX Orin’s high TOPS and optional dGPUs imply higher cost and power draw than micro-accelerators. For low-cost, ultra-low-power edge nodes (e.g., mass-deployed telemetry), a smaller accelerator may be preferable.

- Vendor lock-in risk: heavy reliance on NVIDIA’s stack can accelerate deployment but can also entrench a single-vendor path, which matters for long procurement cycles.

- Overkill for tiny models: if the workload consists of small-footprint models with minimal throughput needs, IGX’s capabilities may be unnecessary.

Comparison: IGX Orin vs. major competitors

| Category | NVIDIA IGX Orin | Qualcomm Cloud AI 100 | Google Coral / Edge TPU | AMD (Xilinx) Versal AI Edge | Intel Movidius / NCS |
|---|---|---|---|---|---|
| Peak TOPS | Up to 1,705 TOPS (with optional RTX dGPU). Integrated Orin SoM up to 248 TOPS. | Up to ~350–400 TOPS (varies by card/form factor); designed for PCIe accelerator cards. Good performance/watt. | Edge TPU: ~4 TOPS per TPU (very low power, extremely efficient for quantized models). Best for tiny, power-sensitive edge nodes. | Versal AI Edge claims very high AI performance/watt (hundreds of TOPS in some SKUs) and strong real-time, safety-focused features; ACAP flexibility for mixed workloads. Good for deterministic, safety-critical systems. | Movidius / NCS devices are small VPUs (single-digit to low-double-digit TOPS historically). Great for prototyping and low-power inference via OpenVINO. |
| Form factor / deployment | MicroATX / SoM / OEM-certified systems; enterprise lifecycle & BMC | PCIe cards or M.2 / server integrations | USB / dev boards / SoM for tiny devices | SoC/ACAP modules or custom boards; OEMs for rugged systems | USB sticks / SoM / low-power modules |
| Software ecosystem | NVIDIA AI Enterprise, CUDA, TensorRT, Isaac, long-term ISV support. | SDKs for inference; growing ecosystem (Cloud AI SDK). | TensorFlow Lite + Edge TPU runtime; limited to quantized models. | Vitis/Accel libraries; strong for custom pipelines and real-time control; more engineering effort. | OpenVINO toolchain; good engineer productivity for certain models. |
| Safety / industrial readiness | Designed for industrial/functional safety (ISO/IEC capable), BMC, ERoT. | Hardware root-of-trust and server-grade features on some designs. | Not targeted at safety-critical use; more for lightweight sensing and prototypes. | Targeted at edge safety-critical use cases (ISO 26262, IEC 61508 mentions). | Generally developer-focused; safety deployments require integration work. |

Recommendation — when IGX Orin should be first choice

IGX Orin is an excellent first choice for enterprises when the project requirements include:

- High aggregate inference throughput or multiple high-resolution video feeds,

- Strong needs for enterprise manageability, networking throughput, and remote provisioning,

- Safety-critical or regulated applications where ISO/IEC capabilities and hardware security matter, and

- A desire to shorten time-to-production using NVIDIA’s software and partner ecosystem.

Recommendation — when to consider alternatives

If your deployment is constrained by strict power or cost budgets, if you need many thousands of extremely low-cost endpoints (where Edge TPU or Movidius-class devices shine), or if you require a non-NVIDIA heterogeneous architecture for long-term diversification (e.g., ACAP/Versal for deterministic control), evaluate those alternatives carefully. Qualcomm’s Cloud AI 100 is a strong PCIe accelerator option where NVIDIA is not required, while Xilinx/Versal offers a different tradeoff (ACAP flexibility and a safety orientation) at the cost of deeper engineering effort.
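The two recommendation lists above can be condensed into a simple screening helper. This is a hypothetical sketch that mirrors this article's rules of thumb, not a procurement policy: the field names in `Requirements` and the mapping from requirements to platforms are illustrative assumptions, and a real evaluation would weigh measured cost, power, availability, and certification evidence rather than booleans.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Requirements:
    # Hypothetical screening inputs; every flag defaults to False.
    high_throughput: bool = False        # many HD streams or large models
    enterprise_mgmt: bool = False        # BMC, high-speed networking, remote provisioning
    safety_regulated: bool = False       # functional-safety or regulated deployment
    low_power_or_cost: bool = False      # strict power or unit-cost budget
    mass_endpoints: bool = False         # thousands of very low-cost nodes
    avoid_nvidia: bool = False           # explicit vendor-diversification policy
    deterministic_control: bool = False  # hard real-time control loops


def shortlist(req: Requirements) -> List[str]:
    """Return candidate platforms following this article's rules of thumb (illustrative only)."""
    candidates: List[str] = []
    # IGX Orin: throughput, manageability, or safety needs, unless NVIDIA is excluded.
    if not req.avoid_nvidia and (req.high_throughput or req.enterprise_mgmt or req.safety_regulated):
        candidates.append("NVIDIA IGX Orin")
    # Tiny, power- or cost-constrained endpoints.
    if req.mass_endpoints or req.low_power_or_cost:
        candidates += ["Google Coral / Edge TPU", "Intel Movidius / NCS"]
    # Deterministic control, or safety work without NVIDIA.
    if req.deterministic_control or (req.avoid_nvidia and req.safety_regulated):
        candidates.append("AMD (Xilinx) Versal AI Edge")
    # Non-NVIDIA PCIe acceleration for high throughput.
    if req.avoid_nvidia and req.high_throughput:
        candidates.append("Qualcomm Cloud AI 100")
    return candidates


if __name__ == "__main__":
    print(shortlist(Requirements(high_throughput=True, safety_regulated=True)))
    print(shortlist(Requirements(avoid_nvidia=True, high_throughput=True, deterministic_control=True)))
```

The returned order is only a listing order, not a ranking. An empty result means none of the rules of thumb apply and the requirement set needs a deeper, case-by-case evaluation.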

A compelling first choice

For enterprise-grade, mission-critical edge AI where throughput, security, manageability, and safety certifications matter, NVIDIA IGX Orin is a compelling first choice thanks to its performance, connectivity, and mature software ecosystem. For ultra-low-power nodes, highly cost-sensitive mass deployments, or organizations explicitly avoiding NVIDIA lock-in, select alternatives (Google Coral, Qualcomm Cloud AI 100, Versal ACAPs, or Intel VPUs) based on the precise technical and procurement constraints.
